Object Segmentation and Tracking Using SAM2 Model

Overview

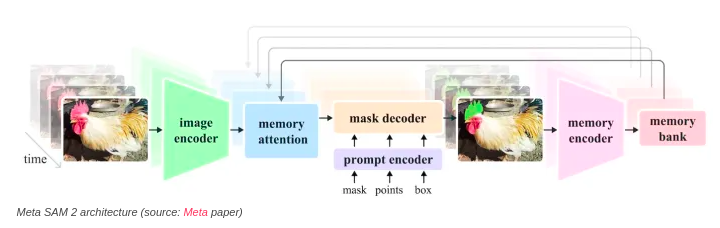

In this project, an advanced object segmentation and tracking system was implemented using the SAM2 (Segment Anything Model 2) model. Object segmentation and tracking are critical tasks in computer vision, with applications ranging from video surveillance to autonomous vehicles. The SAM2 model represents a cutting-edge approach to segmentation, providing high accuracy and efficiency when segmenting objects in varied environments. This project integrated the SAM2 model with a tracking algorithm to create a robust system capable of identifying and following objects across video frames in real time.

Objectives

- High-Accuracy Object Segmentation: Utilize the SAM2 model to achieve precise segmentation of objects within images and video frames, irrespective of object scale, occlusion, or background complexity.

- Real-Time Object Tracking: Implement an effective tracking mechanism that follows segmented objects across multiple video frames, ensuring consistency and reliability in dynamic environments.

- Performance Optimization: Optimize the segmentation and tracking pipeline for real-time applications, focusing on minimizing latency and maximizing accuracy.

- Evaluation and Validation: Assess the system’s performance across various datasets and scenarios, using metrics such as Intersection over Union (IoU), tracking accuracy, and frame rate.
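As an illustration of the IoU metric named above, here is a minimal sketch for two binary masks. The function name `mask_iou` and the convention of returning 1.0 when both masks are empty are assumptions made for this example, not details taken from the project.

```python
import numpy as np


def mask_iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection over Union between two binary masks of the same shape."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    if pred.shape != target.shape:
        raise ValueError("masks must have the same shape")
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Both masks are empty; treating this as a perfect match is a convention.
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return float(intersection / union)


# Two 4x4 masks of 4 pixels each that share 2 pixels.
a = np.zeros((4, 4), dtype=bool)
b = np.zeros((4, 4), dtype=bool)
a[0:2, 0:2] = True
b[0:2, 1:3] = True
print(mask_iou(a, b))  # 2 shared pixels / 6 pixels in the union
```

A per-frame IoU like this can be averaged over a sequence to summarize segmentation quality against ground-truth masks.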

Technical Architecture

Input Data Preparation:

- The input data consists of video sequences or real-time video streams. Pre-processing steps include resizing, normalization, and augmentation to ensure compatibility with the SAM2 model and enhance model performance.
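The resizing and normalization steps could look roughly like the sketch below. The target size, the ImageNet mean and standard deviation, and the nearest-neighbour resize are all assumptions chosen to keep the example dependency-light; a real pipeline would more likely use OpenCV's resize, and a model wrapper may already apply its own transforms.

```python
import numpy as np

# Assumed values: common ImageNet statistics; the real pipeline may differ.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(frame: np.ndarray, size: int) -> np.ndarray:
    """Resize an HxWxC frame to size x size with nearest-neighbour sampling."""
    h, w = frame.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return frame[rows[:, None], cols[None, :]]


def preprocess(frame: np.ndarray, size: int = 1024) -> np.ndarray:
    """uint8 HxWx3 RGB frame -> float32 array of shape 3 x size x size."""
    resized = resize_nearest(frame, size).astype(np.float32) / 255.0
    normalized = (resized - MEAN) / STD
    return np.transpose(normalized, (2, 0, 1))  # channels first


frame = np.full((480, 640, 3), 128, dtype=np.uint8)
out = preprocess(frame, size=256)
print(out.shape, out.dtype)
```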

Object Segmentation Using SAM2:

- The SAM2 model is employed to segment objects in each video frame. SAM2 is capable of handling complex scenes, producing precise masks for each detected object.

- SAM2 is a transformer-based deep learning model; its attention mechanisms focus on the relevant parts of the image, which supports high accuracy even in cluttered or dynamic environments.
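To show how a segmentation output can be turned into something a tracker consumes, the sketch below binarizes raw mask logits and derives a bounding box. It assumes the model returns one array of logits per object and that a threshold of 0.0 (probability 0.5 under a sigmoid) is appropriate; the helper names are hypothetical.

```python
import numpy as np


def logits_to_mask(logits: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Binarize raw mask logits; a logit of 0.0 corresponds to probability 0.5."""
    return logits > threshold


def mask_to_box(mask: np.ndarray):
    """Return (x_min, y_min, x_max, y_max) of a binary mask, or None if empty."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Synthetic logits: strongly negative background, positive 3x4 object region.
logits = np.full((6, 8), -5.0, dtype=np.float32)
logits[2:5, 3:7] = 4.0
mask = logits_to_mask(logits)
print(mask_to_box(mask))  # (3, 2, 6, 4)
```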

Object Tracking:

- After segmentation, a tracking algorithm (e.g., SORT, DeepSORT, or a custom tracking mechanism) is applied to follow the segmented objects across subsequent frames.

- The tracker assigns a unique identifier to each object and keeps it consistent as the object moves or changes appearance.

- The tracker uses the segmentation masks provided by SAM2 to enhance tracking accuracy, especially in cases of partial occlusion or when objects exit and re-enter the frame.
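The identifier assignment described above can be illustrated with a deliberately simplified tracker that matches boxes between consecutive frames by greedy IoU. This is a stand-in for illustration only: it is not SORT or DeepSORT (which add motion models and, for DeepSORT, appearance features), it drops unmatched tracks immediately, so it cannot re-identify objects that leave and re-enter the frame, and the class name and threshold are assumptions.

```python
from dataclasses import dataclass, field
from itertools import count


def box_iou(a, b):
    """IoU of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class GreedyIoUTracker:
    iou_threshold: float = 0.3
    tracks: dict = field(default_factory=dict)  # track id -> last known box
    _ids: count = field(default_factory=lambda: count(1))

    def update(self, boxes):
        """Match boxes to existing tracks by descending IoU; return {id: box}."""
        pairs = sorted(
            ((box_iou(t_box, box), t_id, i)
             for t_id, t_box in self.tracks.items()
             for i, box in enumerate(boxes)),
            reverse=True,
        )
        assigned, used_tracks, used_boxes = {}, set(), set()
        for iou, t_id, i in pairs:
            if iou < self.iou_threshold:
                break  # remaining pairs overlap even less
            if t_id in used_tracks or i in used_boxes:
                continue
            assigned[t_id] = boxes[i]
            used_tracks.add(t_id)
            used_boxes.add(i)
        # Every unmatched box starts a new track with a fresh identifier.
        for i, box in enumerate(boxes):
            if i not in used_boxes:
                assigned[next(self._ids)] = box
        self.tracks = assigned  # unmatched tracks are dropped in this sketch
        return assigned


tracker = GreedyIoUTracker()
frame1 = tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)])
frame2 = tracker.update([(52, 51, 62, 61), (1, 0, 11, 10)])
print(frame1)
print(frame2)
```

In the second frame the boxes arrive in a different order, yet each keeps the identifier it received in the first frame because matching is driven by overlap rather than list position.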

Post-Processing and Output:

- The system merges segmentation masks and tracking data to produce a coherent video output where objects are highlighted and tracked across frames.

- Visualizations include bounding boxes, segmentation masks, and tracking trajectories overlaid on the original video.

- The results are evaluated using relevant metrics, and outputs can be saved as annotated video files for further analysis.
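A mask overlay of the kind listed above can be sketched as a simple alpha blend. The function name, default colour, and blending weight are assumptions for this example; the project may well use OpenCV drawing routines instead. Note that OpenCV stores frames in BGR order, so colours other than pure green would need their channels swapped.

```python
import numpy as np


def overlay_mask(frame, mask, color=(0, 255, 0), alpha=0.5):
    """Alpha-blend `color` onto a copy of `frame` wherever `mask` is True."""
    out = frame.astype(np.float32)  # astype returns a copy, so frame is untouched
    tint = np.array(color, dtype=np.float32)
    out[mask] = (1.0 - alpha) * out[mask] + alpha * tint
    return out.round().astype(np.uint8)


frame = np.full((4, 4, 3), 100, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
blended = overlay_mask(frame, mask, color=(0, 200, 0))
print(blended[1, 1], blended[0, 0])
```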

Tech Stack

- Programming Languages: Python

- Frameworks and Libraries:

  - SAM2 (Segment Anything Model 2): For high-accuracy object segmentation.

  - OpenCV: For video processing, visualization, and basic image manipulations.

  - PyTorch: Backend for running the SAM2 model; the official SAM 2 implementation is PyTorch-based.

  - Tracking Algorithms: SORT (Simple Online and Realtime Tracking), DeepSORT, or custom tracking algorithms for object tracking.

  - NumPy/Pandas: For data manipulation, tracking data management, and analysis.

- Tools:

  - Jupyter Notebooks: For development, experimentation, and prototyping.

  - Docker: For containerizing the application, ensuring consistency across different environments.

  - Git: For version control and project collaboration.

  - FFmpeg: For processing and converting video files.
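As one example of the FFmpeg step, the sketch below builds a command that splits a video into numbered JPEG frames. It assumes the segmentation stage reads frames from a directory of files named `00000.jpg`, `00001.jpg`, and so on; the file names `input.mp4` and `frames` are hypothetical, and the command is only constructed here, not executed.

```python
import shlex
from pathlib import Path


def frame_extraction_command(video: str, out_dir: str, quality: int = 2) -> list:
    """Build an ffmpeg argument list that writes frames as 00000.jpg, 00001.jpg, ..."""
    pattern = str(Path(out_dir) / "%05d.jpg")
    return [
        "ffmpeg",
        "-i", video,            # input video file
        "-q:v", str(quality),   # JPEG quality scale (lower means higher quality)
        "-start_number", "0",   # number the first frame 0
        pattern,
    ]


cmd = frame_extraction_command("input.mp4", "frames")
print(shlex.join(cmd))
```

To run it, pass `cmd` to `subprocess.run(cmd, check=True)` with FFmpeg installed and the output directory already created.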

Conclusion

The integration of the SAM2 model for object segmentation with a real-time tracking algorithm resulted in a powerful system capable of handling complex video sequences with high accuracy. The project successfully demonstrated the strengths of the SAM2 model in segmenting objects under various challenging conditions, including occlusions, varying scales, and dynamic backgrounds. By combining advanced segmentation with robust tracking, the system provides a reliable solution for real-time applications, such as surveillance, autonomous driving, and video analytics.

The project’s success lies in its ability to segment and track objects accurately and efficiently in real time, a task that is crucial for many computer vision applications. The modular design of the system allows for easy adaptation and scaling, so it can be extended to more complex scenarios or integrated with other models and techniques in the future. This project highlights the potential of advanced segmentation models like SAM2 to push the boundaries of object tracking and vision-based automation.
