Artificial Intelligence (AI) is reshaping industries at an unprecedented pace, offering major opportunities for innovation and efficiency. Yet as AI systems grow more sophisticated, a critical challenge emerges: their decision-making processes are often opaque. This has created a pressing need for Explainable AI (XAI), a set of methods and practices that make AI decisions understandable and help build trust among users. At aiblux, we are dedicated to client-centric software solutions that bridge the gap between cutting-edge innovation and human understanding. In this guide, we explore how building trust with Explainable AI can empower your business, foster confidence, and drive meaningful partnerships.

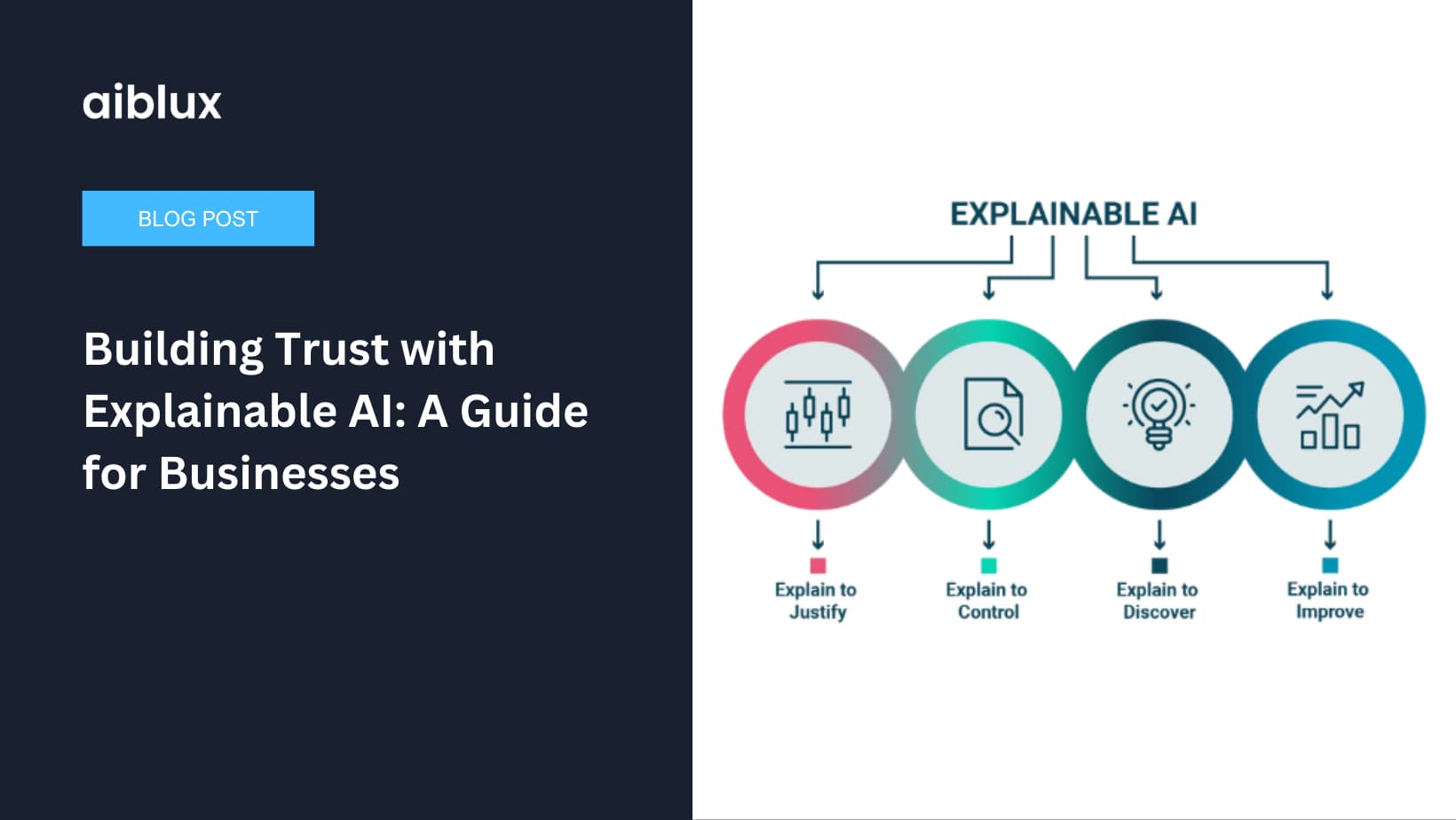

What is Explainable AI (XAI)?

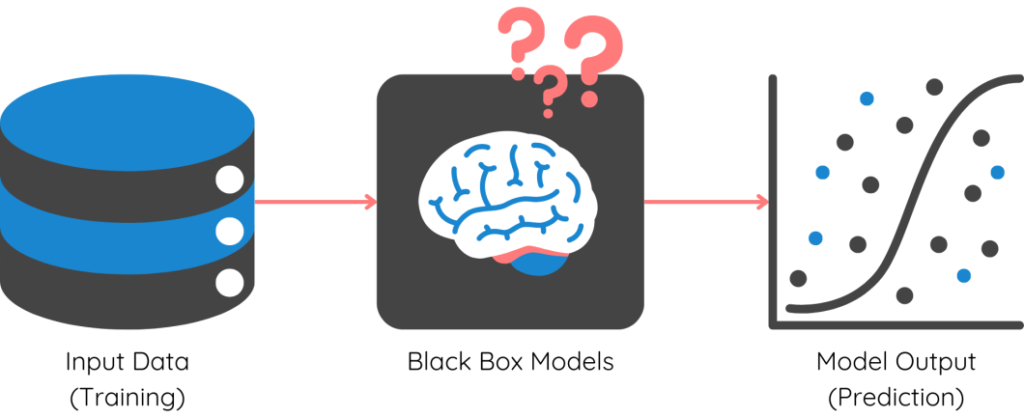

Explainable AI refers to AI systems designed to provide clear, understandable insights into their decision-making processes. Unlike opaque "black-box" models, XAI lets businesses trace and interpret how conclusions are reached. This transparency is crucial in sectors like healthcare, fintech, and logistics, where accountability and compliance are paramount.

For example, if an AI system recommends a specific medical treatment, doctors need to understand the rationale behind it to ensure patient safety. Similarly, in fintech, XAI can justify loan approvals or fraud detection decisions, building confidence among clients and regulators.

The Evolution of Explainable AI

The concept of XAI has evolved alongside the increasing adoption of AI across industries. Many high-performing models, such as deep neural networks and large ensembles, gained accuracy at the cost of transparency, making their outputs hard to interpret. As AI began to play a more significant role in critical decision-making, the need for explainability became apparent. Today, XAI is a growing field, with researchers and developers working to create AI systems that are both powerful and transparent.

Why Trust Matters in AI Adoption

Trust is the foundation of any successful AI implementation. Consider the following scenarios:

- A healthcare provider relies on AI to diagnose patients but cannot explain how the system reached its conclusions.

- A fintech company uses AI to approve loans but cannot justify why certain applicants are rejected.

In both cases, the lack of transparency undermines client confidence and can lead to legal and ethical challenges. By adopting Explainable AI, businesses can ensure accountability, reduce risks, and build stronger relationships with their stakeholders.

The Human Factor in AI

One of the key challenges in AI adoption is the human factor. For AI to be truly effective, it must be trusted by the people who use it. This trust is built on transparency and understanding. When users can see how an AI system works and understand its decision-making process, they are more likely to trust and adopt the technology.

Key Benefits of Explainable AI

1. Enhanced Transparency

XAI provides detailed insights into AI decision-making, helping businesses understand the “why” behind each outcome. This is particularly valuable in regulated industries like healthcare and fintech, where compliance is critical.

2. Improved Client Trust

When clients can see how decisions are made, they are more likely to trust the AI system. This trust fosters long-term partnerships and encourages collaboration.

3. Risk Mitigation

Explainable AI helps identify and address biases or errors in the system, reducing the risk of costly mistakes and legal complications.

4. Better Decision-Making

With clear insights into AI processes, businesses can make more informed decisions, optimizing operations and driving growth.

5. Regulatory Compliance

Many industries are subject to strict regulations that require transparency in decision-making. XAI helps businesses meet these requirements by showing how decisions are reached, reducing the risk of fines and legal issues.

How aiblux Delivers Trustworthy AI Solutions

At aiblux, our mission is to deliver innovation and creativity through tailored solutions that give our clients a competitive advantage. Here’s how we incorporate XAI into our services:

1. Custom AI Development

We design AI systems that align with your unique business needs, ensuring transparency and scalability.

2. End-to-End Services

From conceptualization to deployment, our team ensures every step of the AI development process is clear and understandable.

3. Agile Methodologies

Our approach is rooted in agile practices, allowing for continuous feedback and iteration to deliver high-quality software.

4. Training and Support

We provide comprehensive training to ensure that your team can effectively use and understand the AI system, fostering trust and confidence.

Real-World Applications of Explainable AI

Healthcare

XAI helps doctors understand AI-driven diagnoses, improving patient outcomes and compliance with medical standards. For example, an AI system can explain why it recommends a specific treatment plan, allowing doctors to make informed decisions.

Fintech

Transparent AI systems justify loan approvals and fraud detection, building trust with clients and regulators. For instance, an AI system can explain why a loan application was rejected, providing the applicant with clear reasoning.
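To illustrate how such reasoning can be produced, here is a minimal Python sketch that assumes a simple linear scoring model. The feature names, weights, reference values, and threshold are hypothetical and chosen only for illustration. Because a linear model's score is the sum of per-feature contributions, the features that pulled the score down the most can be reported directly as reasons for a rejection. Real systems often use more complex models together with attribution methods such as SHAP, but the idea of attributing a decision to its inputs is similar.

```python
# Minimal sketch: explaining a decision from a hypothetical linear credit-scoring model.
# All feature names, weights, and reference values are illustrative assumptions.

WEIGHTS = {
    "income_k": 0.04,         # annual income, in thousands
    "debt_to_income": -5.0,   # monthly debt divided by monthly income
    "late_payments": -0.8,    # late payments in the last 24 months
    "years_employed": 0.15,   # years with current employer
}

# A hypothetical "reference applicant" that explanations are measured against.
REFERENCE = {
    "income_k": 55.0,
    "debt_to_income": 0.30,
    "late_payments": 1.0,
    "years_employed": 4.0,
}
REFERENCE_SCORE = 0.5  # score the model gives the reference applicant
THRESHOLD = 0.0        # approve when score >= THRESHOLD


def explain_decision(applicant, top_n=2):
    """Return (approved, score, reasons) for one applicant.

    In a linear model the score equals REFERENCE_SCORE plus the sum of
    per-feature contributions, so the contributions account for the decision exactly.
    """
    contributions = {
        name: weight * (applicant[name] - REFERENCE[name])
        for name, weight in WEIGHTS.items()
    }
    score = REFERENCE_SCORE + sum(contributions.values())
    approved = score >= THRESHOLD
    # Reasons: features that lowered the score, most negative first.
    reasons = sorted(
        ((name, c) for name, c in contributions.items() if c < 0),
        key=lambda pair: pair[1],
    )
    return approved, score, reasons[:top_n]


if __name__ == "__main__":
    applicant = {
        "income_k": 40.0,
        "debt_to_income": 0.55,
        "late_payments": 3,
        "years_employed": 2,
    }
    approved, score, reasons = explain_decision(applicant)
    print(f"{'approved' if approved else 'rejected'} (score {score:.2f})")
    for name, contribution in reasons:
        print(f"  {name}: {contribution:+.2f}")
```

For the sample applicant, the sketch reports a rejection and names `late_payments` and `debt_to_income` as the largest negative contributions. Measuring contributions against a reference applicant keeps each reason relative to a meaningful baseline rather than to zero.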

Logistics

AI-powered route optimization becomes easier to trust and audit when businesses can trace its decision-making. For example, an AI system can explain why it chose a specific route, helping logistics teams validate its choices and refine their operations.
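As a rough illustration, the Python sketch below assumes route choices are made by minimizing a weighted sum of cost components. The weights, component names, and route data are hypothetical. Because the cost is additive, the choice can be explained by comparing each component of the chosen route against the runner-up.

```python
# Minimal sketch: explaining a route choice under a hypothetical additive cost model.
# Cost weights, component names, and route data are illustrative assumptions.

COST_WEIGHTS = {
    "distance_km": 0.5,   # cost per kilometre
    "duration_min": 0.3,  # cost per minute of driver time
    "tolls": 1.0,         # toll charges, counted at face value
}


def cost_breakdown(route):
    """Weighted cost of each component for one route."""
    return {name: weight * route[name] for name, weight in COST_WEIGHTS.items()}


def total_cost(route):
    return sum(cost_breakdown(route).values())


def choose_and_explain(routes):
    """Pick the cheapest route and explain it against the runner-up.

    Returns (best, runner_up, savings), where savings[component] > 0 means
    the chosen route is cheaper on that component.
    """
    if len(routes) < 2:
        raise ValueError("need at least two candidate routes to compare")
    ranked = sorted(routes, key=lambda name: total_cost(routes[name]))
    best, runner_up = ranked[0], ranked[1]
    best_parts = cost_breakdown(routes[best])
    other_parts = cost_breakdown(routes[runner_up])
    savings = {name: other_parts[name] - best_parts[name] for name in COST_WEIGHTS}
    return best, runner_up, savings


if __name__ == "__main__":
    routes = {
        "coastal": {"distance_km": 120, "duration_min": 150, "tolls": 0},
        "highway": {"distance_km": 100, "duration_min": 90, "tolls": 25},
        "inland": {"distance_km": 140, "duration_min": 160, "tolls": 0},
    }
    best, runner_up, savings = choose_and_explain(routes)
    print(f"Chose {best} over {runner_up}:")
    for name, amount in savings.items():
        verb = "saves" if amount >= 0 else "costs"
        print(f"  {name}: {verb} {abs(amount):.2f}")
```

In the sample data, the highway route wins despite its tolls because its distance and time savings outweigh the toll cost. Surfacing that trade-off is the kind of explanation a dispatcher can check against their own experience.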

Challenges and Solutions in Implementing XAI

While Explainable AI offers numerous benefits, it also presents challenges:

1. Complexity vs. Simplicity

Balancing technical accuracy with user-friendly explanations can be challenging. Solution: Focus on intuitive interfaces and visualizations.

2. Performance Trade-offs

Generating explanations, particularly post-hoc explanations for complex models, can require additional computational resources. Solution: Optimize explanation algorithms and leverage cloud-based solutions for scalability.

3. Data Privacy Concerns

Ensuring transparency without compromising sensitive data is crucial. Solution: Implement robust security measures and compliance frameworks.

4. User Adoption

Encouraging users to trust and adopt XAI systems can be challenging. Solution: Provide comprehensive training and support to help users understand and use the system effectively.

Future Trends in Explainable AI

As AI continues to evolve, XAI will play an increasingly important role in shaping ethical and trustworthy AI systems. Trends to watch include:

1. Integration with AI Governance Frameworks

XAI will become an integral part of AI governance frameworks, ensuring that AI systems are transparent and accountable.

2. Advancements in Natural Language Processing (NLP)

Advances in NLP will make AI explanations more accessible and user-friendly, helping users understand complex AI systems.

3. Industry-Specific XAI Solutions

As XAI becomes more widely adopted, industry-specific solutions will emerge, tailored to the unique needs of sectors like healthcare, fintech, and logistics.

Conclusion

Explainable AI is not just a technological advancement—it’s a pathway to building trust, ensuring accountability, and driving innovation. By adopting XAI, businesses can enhance transparency, mitigate risks, and foster stronger client relationships. At aiblux, we are committed to delivering client-centric software solutions that empower businesses to thrive in the digital age.

Ready to transform your business with Explainable AI? Contact aiblux today to explore our tailored AI solutions and discover how we can help you build trust and drive innovation.