In the World of AI Algorithms and Computational Complexity: A Deep Dive into the Core of Machine Intelligence

The study of computational complexity in AI algorithms traces back to the early days of the field, when pioneers focused on understanding the efficiency and limitations of algorithms for hard problems. As AI algorithms grew more sophisticated and capable, computational complexity became a key measure of their performance and feasibility in practical applications.

Artificial Intelligence (AI) is revolutionizing industries worldwide, from healthcare and finance to manufacturing and media. At the heart of this transformation are algorithms that tackle tasks once thought impossible for machines, built on computational principles that determine how efficiently they can run. In this post, we explore the main families of AI algorithms, explain what computational complexity measures, and show how the two together define AI’s capabilities and limitations in today’s digital landscape.

What Are AI Algorithms?

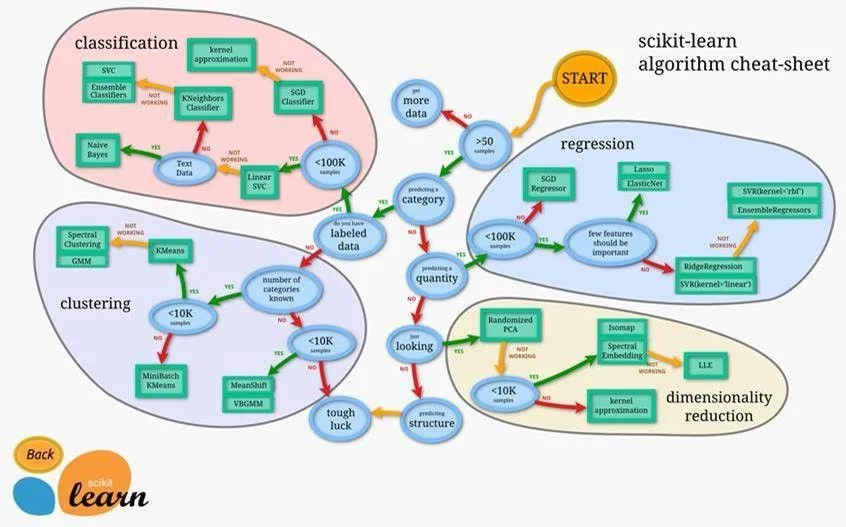

Simply put, AI algorithms are sets of instructions that enable machines to make decisions or solve problems. Unlike traditional algorithms with fixed steps, AI algorithms incorporate more dynamic techniques like pattern recognition, predictive modeling, and the capacity to improve through learning. They are commonly categorized into three types:

1) Supervised Learning Algorithms:

These algorithms are trained on labeled data, where each input is paired with an output label. Popular supervised learning algorithms include:

a) Linear Regression:

Used to predict continuous values.

b) Neural Networks:

Central to deep learning, useful for both classification and regression.

c) Support Vector Machines (SVM):

Suited primarily for classification tasks, though a variant (support vector regression) handles regression.
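To make the supervised setting concrete, here is a minimal sketch of linear regression with a single input feature, fitted by ordinary least squares in plain Python. The function names and the toy data (generated from y = 2x + 1) are illustrative assumptions, not part of any library. The fit needs only a few passes over the data, so it runs in O(n) time.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared errors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variance sums; the 1/n factors cancel in the ratio.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predict a continuous value for a new input x."""
    return slope * x + intercept


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [3.0, 5.0, 7.0, 9.0]  # hypothetical data from y = 2x + 1
    slope, intercept = fit_simple_linear_regression(xs, ys)
    print(slope, intercept)  # → 2.0 1.0
    print(predict(slope, intercept, 5.0))  # → 11.0
```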

2) Unsupervised Learning Algorithms:

These work without labeled data, uncovering hidden patterns or groupings within the dataset. Examples include:

a) K-Means Clustering:

Widely used in market segmentation and customer analysis.

b) Principal Component Analysis (PCA):

Reduces the number of dimensions in a dataset, which helps with data compression and visualization.

c) Autoencoders:

Neural networks that learn compressed representations of data, often used for anomaly detection.
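As an illustration of clustering, here is a minimal sketch of K-Means using Lloyd's algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The `kmeans` helper and the sample points are hypothetical, and seeding the centroids with the first k points is a simplification (libraries commonly use smarter seeding such as k-means++). Each iteration costs roughly O(n·k·d) for n points, k clusters, and d dimensions.

```python
import math


def kmeans(points, k, max_iters=100):
    """Lloyd's algorithm for K-Means. Returns (centroids, labels)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Simplification: seed the centroids with the first k points.
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable too
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # leave an empty cluster's centroid where it was
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels


if __name__ == "__main__":
    pts = [(0, 0), (5, 5), (0, 1), (1, 0), (5, 6), (6, 5)]
    centroids, labels = kmeans(pts, 2)
    print(labels)  # → [0, 1, 0, 0, 1, 1]
```

On these sample points, the group near (0, 0) and the group near (5, 5) end up in separate clusters after two iterations.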

3) Reinforcement Learning Algorithms:

These algorithms learn by trial and error, refining actions to maximize rewards. They are essential in robotics, gaming, and real-world applications such as autonomous driving. Key methods include:

a) Q-Learning:

A model-free reinforcement learning approach that learns the expected value of taking each action in each state.

b) Deep Q-Networks (DQNs):

Combine Q-learning with deep neural networks for enhanced performance.

c) Policy Gradient Methods:

Optimize the policy directly to maximize expected reward.
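The Q-learning update can be sketched on a tiny, made-up environment: a five-state corridor where the agent starts at state 0 and earns a reward of 1 only on reaching state 4. Everything here (the environment, `step`, `q_learning`, and the hyperparameter values) is a hypothetical illustration. The agent never models the environment's dynamics; it only updates a table of action values from observed transitions, which is what makes Q-learning model-free.

```python
import random

N_STATES = 5      # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Toy deterministic corridor: reward 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def greedy(q_row, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy behaviour: explore with probability epsilon.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q[state], rng)
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward r + gamma * max_a' Q(s', a'); no bootstrap at the goal.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    q = q_learning()
    policy = ["right" if row[1] > row[0] else "left" for row in q[:-1]]
    print(policy)
```

A Deep Q-Network keeps the same update but replaces the table `q` with a neural network, which is what lets the idea scale to state spaces far too large to enumerate.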

Each type of algorithm has distinct advantages and challenges, and the choice among them depends on factors like data type, available computational resources, and the specific problem. Understanding these distinctions is crucial for leveraging AI effectively.

What is Computational Complexity?

In AI, the computational complexity of an algorithm describes the computational resources, such as time and memory, that it needs as a function of the size of its input. Complexity analysis estimates an algorithm’s theoretical efficiency and classifies algorithms by how quickly those demands grow. By assessing it, researchers and practitioners gain insight into an algorithm’s scalability, its suitability for large-scale applications, and the trade-offs between resource usage and performance, which is crucial when optimizing AI algorithms for real-world deployment.

The Importance of Computational Complexity:

In AI, solving a problem isn’t just about finding a solution but also about finding it efficiently. Knowing how an algorithm’s time and memory requirements grow is essential for judging whether it is feasible on large datasets or in real-time applications.

1) Time Complexity:

This measures how the number of operations an algorithm performs grows as the input size increases. For example, linear-time algorithms scale proportionally with input size, while exponential-time algorithms quickly become impractical for even moderately large inputs.

2) Space Complexity:

This measures how much memory an algorithm requires as the input size increases, which is critical in AI, especially when processing large datasets.
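The difference between linear and exponential growth is easy to see by counting work directly. In this sketch (the function names are illustrative), summing a list touches each element once, while a brute-force subset-sum search always checks every one of the 2^n subsets so the count is easy to read. Time and space can also diverge: `itertools.combinations` yields subsets lazily, so the search keeps memory use proportional to n, whereas collecting every subset in a list would need memory that grows with 2^n.

```python
from itertools import combinations


def linear_sum(values):
    """Add each element once: n operations, O(n) time and O(1) extra memory."""
    total, ops = 0, 0
    for v in values:
        total += v
        ops += 1
    return total, ops


def brute_force_subset_sum(values, target):
    """Check all 2^n subsets; exponential time, O(n * 2^n) including each sum."""
    checked, found = 0, False
    # combinations() yields subsets lazily, so memory stays proportional to n.
    for size in range(len(values) + 1):
        for subset in combinations(values, size):
            checked += 1
            if sum(subset) == target:
                found = True
    return found, checked


if __name__ == "__main__":
    for n in (8, 12, 16):
        values = list(range(1, n + 1))
        _, linear_ops = linear_sum(values)
        # A negative target is impossible here; the search scans everything regardless.
        _, subsets_checked = brute_force_subset_sum(values, -1)
        print(f"n={n:>2}: linear ops={linear_ops:>2}, subsets checked={subsets_checked:,}")
```

Doubling n from 8 to 16 doubles the linear count (8 to 16) but multiplies the subsets checked by 256 (256 to 65,536).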

Significance of Computational Complexity

Analyzing the computational complexity of common AI algorithms matters because it directly determines whether an algorithm is practical for real-world applications, particularly in fields where speed and resource usage are constrained. Complexity gives practitioners a common basis for comparing algorithms and choosing the most efficient and practical one for a given task, which in turn makes AI solutions easier to scale and deploy across industries.

Complexity analysis also exposes the trade-offs between performance and resource consumption, which matter when optimizing AI systems for environments ranging from cloud platforms to edge devices. With that understanding, developers can design algorithms that scale well while keeping costs and energy use low, making AI more accessible and sustainable across industries. The same analysis supports progress in processing large-scale datasets, real-time analytics, and autonomous decision-making.

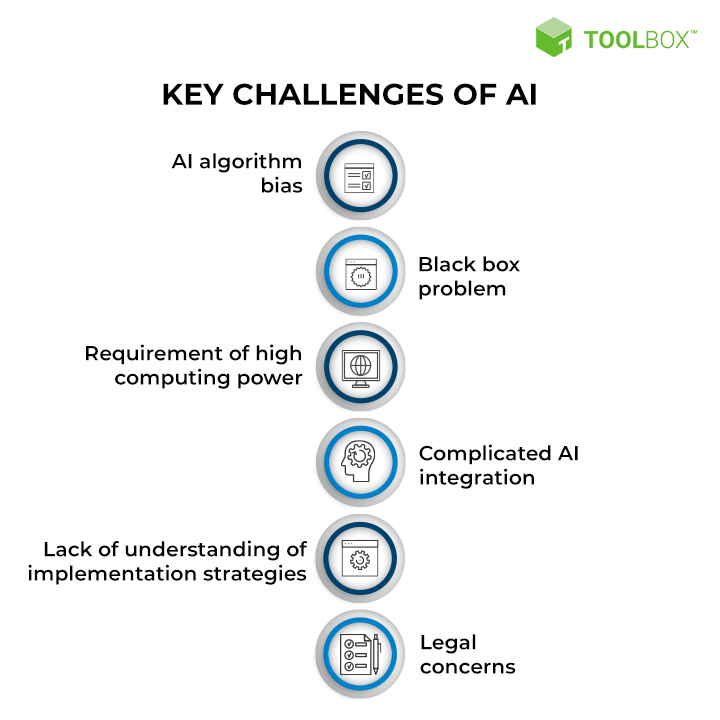

Key Challenges in AI Algorithms and Complexity

AI is advancing at an unprecedented pace, but with it come notable challenges. Some of the main challenges include:

1) Scalability:

Many AI applications involve vast amounts of data, and traditional algorithms may become infeasible due to their complexity. New methods such as distributed computing and parallel processing help address this, but they introduce additional layers of complexity.

2) Interpretability:

As algorithms become more complex (especially deep neural networks), understanding why an algorithm makes a specific decision becomes harder. This lack of transparency can be problematic in high-stakes areas like healthcare or finance.

3) Bias and Fairness:

AI algorithms learn from data, and if the data itself is biased, the algorithms can amplify these biases. For example, facial recognition algorithms trained primarily on light-skinned faces may perform poorly on darker-skinned individuals.

4) Ethical Concerns:

The power of AI comes with ethical considerations. Algorithms used in surveillance, content moderation, and autonomous vehicles all have ethical implications that need to be carefully managed.
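A practical first check for the bias and fairness problem described above is to report a model's accuracy separately for each group instead of as a single aggregate, since a good overall score can hide poor performance on an underrepresented group. This sketch uses a hypothetical `accuracy_by_group` helper with made-up toy labels and group names, not real measurements.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so performance gaps between groups are visible."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


if __name__ == "__main__":
    # Hypothetical toy data, not real measurements.
    y_true = [1, 0, 1, 1, 0, 1, 0, 1]
    y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    scores = accuracy_by_group(y_true, y_pred, groups)
    print(scores)  # → {'A': 1.0, 'B': 0.25}
    gap = max(scores.values()) - min(scores.values())
    print(f"largest accuracy gap: {gap:.2f}")  # prints "largest accuracy gap: 0.75"
```

Here the overall accuracy is 62.5%, which hides the fact that the model is perfect on group A and wrong three times out of four on group B.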

Optimizing AI Algorithms: The Role of Quantum Computing

One potential way to overcome computational limitations in AI lies in quantum computing. For certain problems, quantum algorithms are expected to run significantly faster than the best known classical methods, in some cases exponentially faster. For example:

1) Quantum Algorithms for Machine Learning:

Quantum computing could reduce the complexity of some machine learning tasks, potentially making previously intractable problems solvable. For instance, Grover’s algorithm searches an unsorted database of N items with about √N queries, a quadratic speedup over the roughly N queries any classical algorithm needs.

2) Quantum Neural Networks:

By leveraging quantum bits (qubits), researchers are exploring quantum neural networks that could operate with higher efficiency and improved processing speed.
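The quadratic speedup claimed for Grover’s algorithm is easiest to appreciate as arithmetic. For one marked item among N, the standard analysis gives about floor((π/4)·√N) Grover iterations, each using one oracle query, versus up to N lookups for a classical scan. This sketch (with illustrative function names) compares query counts only; it says nothing about the hardware overhead, error correction, or data-loading costs that real quantum devices face.

```python
import math


def classical_queries_worst_case(n):
    """Unstructured classical search may need to inspect all n items."""
    return n


def grover_iterations(n):
    """Approximate optimal number of Grover iterations for one marked item among n."""
    return math.floor(math.pi / 4 * math.sqrt(n))


if __name__ == "__main__":
    for n in (10**4, 10**6, 10**8):
        print(
            f"N={n:>11,}  classical≈{classical_queries_worst_case(n):>11,}  "
            f"Grover≈{grover_iterations(n):,}"
        )
```

For N = 1,000,000 this gives 785 Grover iterations against up to 1,000,000 classical lookups, and the gap widens as N grows.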

Real-World Impact and Future of AI Algorithms and Complexity

AI is everywhere, from powering virtual assistants like Siri and Alexa to driving autonomous vehicles and recommending movies on streaming platforms. As AI continues to advance, the algorithms and computational complexity behind them will play a critical role in determining what’s possible.

Predictions for the future include:

1) Increased Use of Hybrid Algorithms:

Combining various types of algorithms—like mixing supervised and reinforcement learning—could lead to more adaptable AI.

2) Automation of Complex Tasks:

As AI becomes more efficient, it will be able to automate complex tasks, potentially disrupting traditional industries.

3) Enhanced Human-AI Collaboration:

With more intuitive algorithms, AI can assist humans in making better decisions, providing insights across multiple fields.

Conclusion

In the world of AI algorithms and computational complexity, the ideas may seem technical, but they shape the way we interact with technology in everyday life. By understanding and evaluating the computational complexity of AI algorithms, practitioners and researchers can drive innovation, improve operational efficiency, and realize the full potential of AI technologies. Computational complexity therefore plays a central role in AI research and development, with far-reaching implications for real-world applications. For further information, contact AIBLUX.