Figure Drawing on the UI Using Hand Gesture Recognition with Deep Learning

Overview

In this project, a system was developed that enables users to draw figures on a user interface (UI) using hand gestures recognized by a deep learning model. The project leverages computer vision and deep learning techniques to interpret hand gestures in real-time, translating them into drawing commands on a digital canvas. This application aims to provide an intuitive and interactive way for users to create digital drawings without the need for traditional input devices like a mouse or stylus, enhancing accessibility and creativity in digital art and design.

Objectives

- Hand Gesture Recognition: Develop a robust deep learning model capable of accurately recognizing a variety of hand gestures in real-time.

- Real-Time Figure Drawing: Implement a system that translates recognized hand gestures into drawing commands on a UI, enabling users to draw figures dynamically.

- User-Friendly Interface: Create an intuitive UI that responds seamlessly to hand gestures, providing a smooth and engaging user experience.

- Performance Optimization: Ensure the system operates efficiently in real-time, with minimal latency, to maintain a fluid drawing experience.

- Evaluation: Assess the accuracy of gesture recognition and the responsiveness of the drawing interface, refining the system based on user feedback and performance metrics.
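As a rough illustration of the Evaluation objective, the sketch below computes overall accuracy, per-class recall, mean per-frame latency and the implied frame rate from one labelled test run. The function name `evaluate` and its input format (parallel lists of gesture labels plus per-frame processing times in milliseconds) are assumptions made for illustration, not part of the original project.

```python
from collections import defaultdict
from statistics import mean


def evaluate(true_labels, predicted_labels, latencies_ms):
    """Summarize recognition accuracy and responsiveness for one labelled test run."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("need equal-length, non-empty label sequences")
    totals = defaultdict(int)  # frames per true gesture
    hits = defaultdict(int)    # correctly recognized frames per true gesture
    for truth, pred in zip(true_labels, predicted_labels):
        totals[truth] += 1
        hits[truth] += int(truth == pred)
    mean_latency = mean(latencies_ms)  # raises StatisticsError if the list is empty
    return {
        "accuracy": sum(hits.values()) / len(true_labels),
        # Recall per gesture: share of its frames that were recognized correctly.
        "per_class_recall": {g: hits[g] / totals[g] for g in totals},
        "mean_latency_ms": mean_latency,
        "fps": 1000.0 / mean_latency,
    }
```

For example, with true labels `["pointing", "pointing", "open_hand", "open_hand"]`, predictions `["pointing", "open_hand", "open_hand", "open_hand"]` and latencies of 20, 25, 30 and 25 ms, it reports 75% accuracy, a recall of 0.5 for `pointing` and 1.0 for `open_hand`, and a 25 ms mean latency (40 FPS).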

Technical Architecture

Input Acquisition:

- The system uses a webcam or a depth camera (e.g., Kinect, Intel RealSense) to capture a live video feed of the user’s hand movements.

- The input frames are pre-processed to reduce variations in lighting and background, which improves the accuracy of gesture recognition.

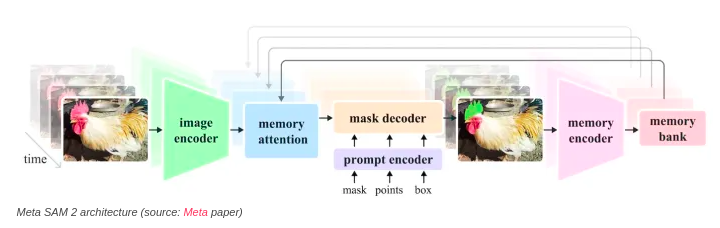
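The pre-processing step can be sketched as follows. This is a minimal, standard-library-only illustration under assumptions the original does not state: frames are grayscale lists of pixel rows, lighting variation is reduced by min-max contrast stretching, and each frame is mirrored so that on-screen motion follows the user's hand. The function name `preprocess_frame` is hypothetical; on real camera frames, OpenCV's `cv2.normalize` (with `cv2.NORM_MINMAX`) and `cv2.flip` perform equivalent operations on NumPy arrays.

```python
def preprocess_frame(frame, mirror=True):
    """Contrast-stretch a grayscale frame to 0..255 and optionally mirror it.

    frame: list of rows, each a list of integer intensities in 0..255.
    """
    lo = min(min(row) for row in frame)
    hi = max(max(row) for row in frame)
    span = hi - lo
    out = []
    for row in frame:
        if span == 0:
            # Uniform frame: there is no range to stretch, so map it to mid-grey.
            new_row = [128] * len(row)
        else:
            # Min-max stretch: darkest pixel becomes 0, brightest becomes 255.
            new_row = [round((v - lo) * 255 / span) for v in row]
        if mirror:
            # Horizontal flip gives a "selfie" view, so moving right draws right.
            new_row.reverse()
        out.append(new_row)
    return out
```

For instance, `preprocess_frame([[10, 27], [61, 44]])` stretches the intensity range 10..61 to 0..255 and mirrors each row, giving `[[85, 0], [170, 255]]`.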

Hand Gesture Recognition Using Deep Learning:

- A deep learning model, typically based on Convolutional Neural Networks (CNNs) or a combination of CNNs and Recurrent Neural Networks (RNNs), is employed to recognize hand gestures from the video feed.

- The model is trained on a large dataset of hand gestures covering actions such as drawing lines and circles, erasing, and other control gestures.

- Techniques like transfer learning, data augmentation, and real-time inference optimizations are used to enhance the model’s performance and accuracy.
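Data augmentation, one of the techniques listed above, can be illustrated on hand-landmark coordinates if the model is fed landmarks (for example from MediaPipe) rather than raw pixels. The sketch assumes each training sample is a list of 2-D landmarks with the wrist at index 0, as in MediaPipe's 21-point hand model, and applies a random rotation, a random scale and small positional jitter about the wrist. The function name, parameter names and default ranges are illustrative assumptions, not values from the original project.

```python
import math
import random


def augment_landmarks(points, rng, max_angle_deg=15.0, scale_range=(0.9, 1.1), jitter=0.01):
    """Return a randomly rotated, scaled and jittered copy of 2-D hand landmarks.

    points: list of (x, y) tuples in normalized image coordinates, with
    points[0] assumed to be the wrist (the pivot for rotation and scaling).
    rng: a random.Random instance, so augmentation can be made reproducible.
    """
    cx, cy = points[0]
    angle = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    scale = rng.uniform(*scale_range)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        # Rotate and scale the offset from the wrist.
        rx = scale * (dx * cos_a - dy * sin_a)
        ry = scale * (dx * sin_a + dy * cos_a)
        # Add small Gaussian noise to mimic landmark-detector jitter.
        out.append((cx + rx + rng.gauss(0, jitter), cy + ry + rng.gauss(0, jitter)))
    return out
```

Passing a seeded `random.Random` makes each augmented sample reproducible, and setting `max_angle_deg=0`, `scale_range=(1, 1)` and `jitter=0` returns the input unchanged, which is a convenient sanity check. Augmentation of this kind is applied to training samples only, not at inference time.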

Gesture-to-Command Mapping:

- Recognized gestures are mapped to specific drawing commands. For example, a “pointing” gesture might initiate a drawing action, while an “open hand” gesture might stop drawing.

- The system supports a range of gestures, allowing users to draw shapes, lines, curves, and even perform actions like undo, redo, and clear.
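The mapping described above can be modelled as a small state machine: a pointing gesture puts the pen down and extends the current stroke with the fingertip position, any other gesture lifts it, and one-shot actions (clear, undo, redo) fire once when their gesture first appears rather than on every frame it is held. This is a sketch under assumptions: the gesture labels other than pointing and open hand (`fist`, `two_fingers`, `three_fingers`), all command names, and the `GestureMapper` class are hypothetical, and fingertip positions are assumed to come from the hand tracker.

```python
# Hypothetical label set: only "pointing" and "open_hand" come from the text above;
# the other gestures and all command names are illustrative assumptions.
GESTURE_TO_COMMAND = {
    "pointing": "pen_down",
    "open_hand": "pen_up",
    "fist": "clear",
    "two_fingers": "undo",
    "three_fingers": "redo",
}


class GestureMapper:
    """Turns per-frame gesture labels into drawing commands."""

    def __init__(self):
        self.drawing = False      # True while the pen is down
        self.last_gesture = None  # gesture seen on the previous frame

    def update(self, gesture, fingertip):
        """Return the list of (command, argument) pairs triggered by one frame."""
        commands = []
        action = GESTURE_TO_COMMAND.get(gesture)
        if action == "pen_down":
            # Pointing: begin a stroke on the first frame, then keep extending it.
            commands.append(("extend_stroke" if self.drawing else "start_stroke", fingertip))
            self.drawing = True
        else:
            if self.drawing:
                # Any non-pointing gesture (including an unknown one) lifts the pen.
                commands.append(("end_stroke", None))
                self.drawing = False
            if action not in (None, "pen_up") and gesture != self.last_gesture:
                # One-shot actions fire on the first frame of the gesture only.
                commands.append((action, None))
        self.last_gesture = gesture
        return commands
```

Feeding it `pointing`, `pointing`, `open_hand` over three frames yields `start_stroke`, `extend_stroke`, then `end_stroke`; a `fist` held for several frames triggers `clear` only on its first frame.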

UI Drawing Canvas:

- The UI features a digital canvas where users can see the results of their gestures in real-time.

- The drawing commands generated from hand gestures are rendered on the canvas using a graphics library such as Pygame or OpenCV.

- The UI includes controls for selecting drawing tools, colors, and brush sizes, all of which can be manipulated via hand gestures.
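On the canvas side, keeping the drawing as a list of strokes (rather than only as pixels) makes undo, redo and clear straightforward and lets the UI re-render each stroke as a polyline, for example with OpenCV's `cv2.polylines` or Pygame's `pygame.draw.lines`. The `StrokeCanvas` class below is a hypothetical, standard-library-only sketch of that model; its method names, the snapshot-based undo strategy and the default color and width are assumptions, not the original implementation.

```python
class StrokeCanvas:
    """Vector model of the drawing: committed strokes plus undo/redo stacks.

    Rendering is left to the UI layer, which draws each stroke's points
    as a polyline in the stroke's color and width.
    """

    def __init__(self, color=(0, 0, 0), width=3):
        self.strokes = []   # committed strokes, each a dict of points/color/width
        self.current = None # stroke being drawn, or None
        self.history = []   # earlier versions of self.strokes, for undo
        self.future = []    # undone versions, for redo
        self.color = color  # current brush color (changed via the UI controls)
        self.width = width  # current brush size

    def _snapshot(self):
        # Save the current stroke list before an edit; a new edit invalidates redo.
        self.history.append(list(self.strokes))
        self.future.clear()

    def start_stroke(self, point):
        self.current = {"points": [point], "color": self.color, "width": self.width}

    def extend_stroke(self, point):
        if self.current is not None:
            self.current["points"].append(point)

    def end_stroke(self):
        if self.current is not None:
            self._snapshot()
            self.strokes.append(self.current)
            self.current = None

    def clear(self):
        if self.strokes:
            self._snapshot()
            self.strokes = []

    def undo(self):
        if self.history:
            self.future.append(self.strokes)
            self.strokes = self.history.pop()

    def redo(self):
        if self.future:
            self.history.append(self.strokes)
            self.strokes = self.future.pop()
```

Snapshot-based undo copies the list of stroke references on every edit, which keeps the code short; a command-based undo stack would avoid that copying for very large drawings.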

Feedback Loop and Refinement:

- The system provides visual feedback to the user, such as highlighting the recognized gesture or showing a preview of the intended action before it is executed.

- The model can be refined continuously, for example by retraining it on gestures collected from user interactions, so that recognition accuracy improves over time.
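One way to implement the preview-before-execute feedback is to require a gesture to be held, with sufficient classifier confidence, for a number of consecutive frames before its action runs, and to expose the hold progress so the UI can draw it (for example as a filling ring around the cursor). The `GestureConfirmer` class, its default thresholds (5 frames, 0.8 confidence) and the assumption that the classifier reports a per-frame confidence score are illustrative choices, not details from the original system.

```python
class GestureConfirmer:
    """Confirm a gesture only after it is held for `hold_frames` confident frames."""

    def __init__(self, hold_frames=5, min_confidence=0.8):
        self.hold_frames = hold_frames
        self.min_confidence = min_confidence
        self.candidate = None  # gesture currently being held
        self.count = 0         # consecutive confident frames of that gesture

    def update(self, gesture, confidence):
        """Feed one frame's prediction; return the gesture when confirmed, else None."""
        if confidence < self.min_confidence:
            # Low-confidence frames reset the hold instead of counting toward it.
            self.candidate, self.count = None, 0
            return None
        if gesture == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = gesture, 1
        if self.count == self.hold_frames:
            return gesture  # fires exactly once per continuous hold
        return None

    def progress(self):
        """Fraction of the hold completed, for on-screen preview feedback."""
        return min(self.count / self.hold_frames, 1.0)
```

With `hold_frames=3`, four consecutive confident `fist` frames return `None`, `None`, `"fist"`, `None`, so the action fires once per hold; a single low-confidence frame resets the progress to 0.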

Tech Stack

- Programming Language: Python

- Frameworks and Libraries:

  - OpenCV: For real-time video capture, hand detection, and UI rendering.

  - TensorFlow/Keras or PyTorch: For building and training the deep learning model for hand gesture recognition.

  - MediaPipe: For hand tracking and gesture recognition (optional, depending on the approach).

  - Pygame: For implementing the drawing canvas and UI elements.

- Tools:

  - Jupyter Notebooks: For prototyping and experimenting with model training.

  - Docker: For containerization and deployment of the application.

  - Git: For version control and collaborative development.

  - Webcam or Depth Camera: For capturing live hand gestures.

Conclusion

This project successfully demonstrates how deep learning can be harnessed to create an innovative and interactive figure drawing system based on hand gesture recognition. By replacing traditional input methods with gesture-based controls, the system opens up new possibilities for digital art and design, making these creative activities more accessible and engaging.

The integration of a real-time gesture recognition model with a responsive UI ensures a fluid and natural drawing experience, providing users with an intuitive tool for creative expression. The project highlights the potential of deep learning in enhancing human-computer interaction, particularly in areas where traditional interfaces may fall short.

Future enhancements could include expanding the range of recognizable gestures, improving the system’s accuracy and responsiveness, and integrating the application with other creative tools or platforms. Overall, this project serves as a strong foundation for further exploration into gesture-based UI interactions and their applications in various domains.

For more information on how aiblux can help you with custom software solutions, contact us or explore our services.