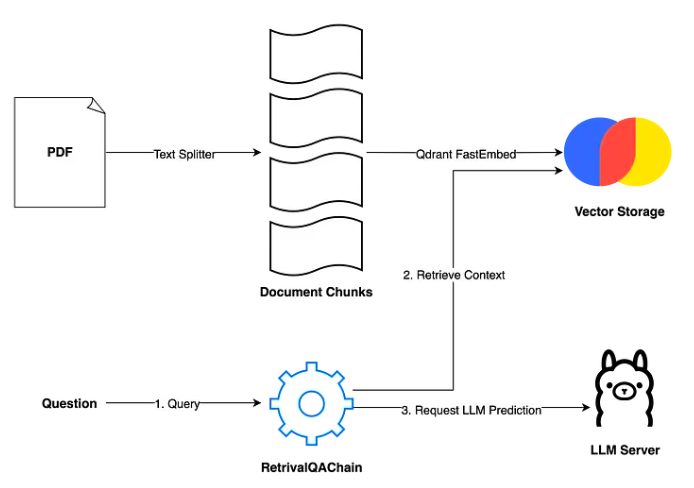

Our website features a Retrieval-Augmented Generation (RAG) chatbot that answers questions from PDF documents. It combines document chunking, vector storage, and language model predictions: each document is split into chunks, the chunks are stored in a vector store, and the chunks most relevant to a query are passed to the language model as context. This lets the chatbot return accurate, contextually relevant responses drawn from the documents themselves.

The RAG-based chatbot processes documents and queries through a four-stage pipeline:

Text Splitting:

Vector Storage:

Retrieval and Contextualization:

LLM Prediction:
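The first three stages above can be illustrated with a minimal, standard-library-only sketch. It is not the chatbot's actual code: the word-count `embed` function and the `TinyVectorStore` class are hypothetical stand-ins for an embedding model and a FAISS index, and the chunk sizes, function names, and prompt wording are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def split_text(text, chunk_size=200, overlap=50):
    """Split text into character windows that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def embed(text):
    """Toy embedding (assumption): lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector index: stores (chunk, vector) pairs."""

    def __init__(self, chunks):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query, k=2):
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, context_chunks):
    """Contextualization: place the retrieved chunks ahead of the question."""
    context = "\n---\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

In a real deployment the overlap between chunks helps keep sentences that straddle a chunk boundary retrievable, and the string returned by `build_prompt` is what would be sent to the language model in the final stage.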

Technologies Used in the RAG-based Chatbot Code

1. Python

2. PyMuPDF (Fitz)

3. Langchain

4. FAISS (Facebook AI Similarity Search)

5. Hugging Face Embeddings

6. Ollama API
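As a rough sketch of the last item, a prompt can be sent to a locally running Ollama server over its REST API using only Python's standard library. The model name `llama3`, the helper names, and the timeout are assumptions for illustration; the source does not say which model or client code the chatbot uses.

```python
import json
import urllib.request

# Ollama's default local endpoint for single-prompt generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build a POST request for Ollama; the model name is an assumed placeholder."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", timeout=60):
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Setting `"stream": False` asks the server for a single JSON object rather than a stream of partial responses, which keeps the client code short at the cost of waiting for the full answer.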

Our RAG-based chatbot combines document chunking, vector storage, and language models to give users quick, accurate, and contextually relevant responses to their queries. This project showcases our commitment to applying current technology to improve user interactions and deliver value.

For more information on how aiblux can help you with custom software solutions, contact us or explore our services.